Gamma:

Several authoritative and very insightful papers and web sites have been written on the subject of gamma (ref). However, definitions and interpretations remain a subject of continuing discussion. Instead of going into the merits and controversies of gamma encoding/decoding/compensation, I will focus on its principles, application, and measurement.

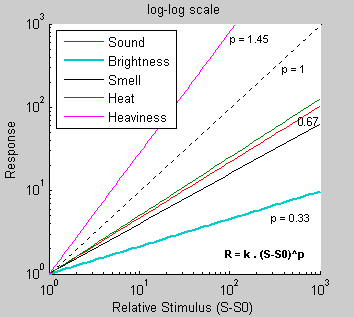

To introduce the topic, let's start with simple empirical observations that lead to a more intuitive meaning of gamma. Our everyday experience shows that the human senses, such as hearing, sight, smell, and the sense of heat, respond and adapt to extremely wide ranges of stimuli. To cope with such a large dynamic range, our hearing system, eyes, nose, and the sensory neurons in the skin evaluate the corresponding stimuli on a nonlinear (approximately logarithmic) scale. Simply put, when we double the light intensity, we don't see the light as twice as bright! The relationship between the magnitude of a physical stimulus and its perceived intensity or strength follows Stevens' power law (Fig. 14). Stevens' formulation is widely considered to supersede the famous Weber-Fechner law on the basis that it describes a wider range of sensations. Mathematically, Stevens' law is formulated as follows:

$$R = k \cdot (S - S_0)^p$$

Taking the logarithm of both sides of this equation leads to a linear relationship between $\log(S - S_0)$ and $\log R$, with the slope of the line determined by p. Figure 14 illustrates the qualitative relationship between the stimulus acting on a human sense (S) and the response that we feel (R). The dashed line (p = 1) corresponds to a linear dependence.
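Written out, the logarithmic step reads as follows. This is only a restatement of Stevens' law with the same symbols (R, k, S, S0, p); nothing new is assumed.

```latex
\log R = \log k + p \cdot \log(S - S_0)
```

On a log-log plot the exponent p is therefore the slope of the line and log k is its intercept.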

Figure 14: Stimulus intensity vs. objective response

For example, the way we feel the heaviness of an object is characterized by a high value of p, reflected in a steep curve. In other words, once a stimulus is strong enough for us to sense the "heaviness" of the object, the perception of "heaviness" rapidly becomes stronger as the stimulus becomes stronger. The other sensory responses shown have lower p values, which means that they can cover much wider ranges of stimulus intensity (dynamic range). Perception of brightness has a particularly low exponent, which indicates that our visual system adapts to a wide dynamic range of light. We will see the exponent 0.33 (1/3) later in the context of the perceptually uniform lightness scale as defined in the CIELAB color space. The sensitivity of the human eye is influenced by the average light level to which the eye is exposed.

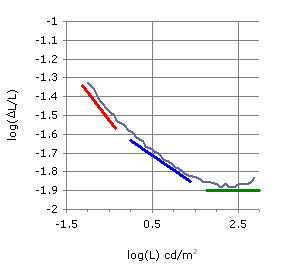

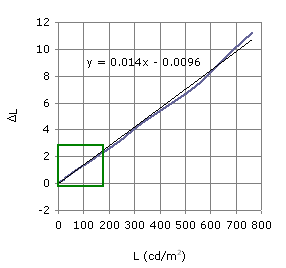

A representative result of noticeable luminance-difference measurements performed in the range of typical LCD luminances is shown in Fig. 15. The quantity log(ΔL/L) is plotted against log(L), where L is luminance in cd/m² (it correlates with relative brightness). Some theoretical approaches predict a three-segmented curve, with slopes of -1 (the linear range), -0.5 (the square root range), and 0 (the Weber range). The same data on the linear scale are shown in Fig. 16. For luminance levels of interest (0.1 - 200 cd/m²), the ratio ΔL/L has an approximately constant value of 0.01 (the so-called Weber-Fechner fraction). The fit to a line with a slope of ~0.01 is remarkably good. In this region, the just noticeable difference in luminance (JND ~ ΔL) then follows the linear equation ΔL = 0.01 * L. This relationship confirms the empirical observation that we are more sensitive to luminance changes at the darker levels (at L = 100, JND = 1, while at L = 10, JND = 0.1). This observation will be mentioned later when gamma encoding is discussed. It is important to note that the sensitivity of human vision varies with the light level and that, in order to discriminate between two close luminance levels, the observer has to be fully adapted to the surround luminance. To be able to distinguish shades of gray in darker areas, our eyes have to be completely adapted to a lower intensity environment (globally as well as locally). For additional information on this topic, refer to the paper "Gamma and its disguises" by Charles Poynton and see the section "Why gamma" at Norman Koren's web pages.

Figure 15: Log-Log scale

Figure 16: Linear scale

In contrast to the human senses, linear data representation is widely used for computer generated imagery, mostly because models of illumination, white-point conversion, RGB color conversions, and color matching are defined linearly. Furthermore, the image sensors (CCD or CMOS) are linear devices that respond proportionally to the number of photons that interact with their electrode structure.


Quantization:

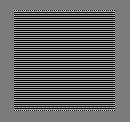

Before going further into gamma, the topic of quantization needs a brief discussion. In order to render captured scenes faithfully, digital devices must be able to adequately sample (quantize) the dynamic ranges of typical everyday scenes. Digital imaging systems, which usually have a limited number of bits (typically 8), must be designed so that there is enough precision in the low levels (shadows) to avoid visible contouring (our eyes are quite sensitive to transitions in shadow areas, see Fig. 16). This can be achieved by applying a logarithmic or gamma-law "correction" to the linear data (logarithmic quantization has often been recommended but rarely implemented). Such a transformation usually proceeds in two steps. First, the image is converted by an analog-to-digital converter to, e.g., 12 bits in uniform steps (4,096 steps). Second, the output signal is reduced to 8 bits by a nonlinear transformation that compresses the high-level bins.

When working with a typical LCD display that has about a 300:1 contrast ratio, our eyes can distinguish about half of the luminance levels. For an 8-bit grayscale gradient, there would theoretically be 8.2 f-stops (exposure zones, log2(300) = 8.2). For all 256 levels, there would be about 256/8.2 ≈ 31 distinguishable levels per zone. As a result of interference from higher luminance levels (flare, bright environment), our capacity to distinguish levels in darker areas is compromised. Coming back to the Weber-Fechner law, the theoretical number of distinguishable levels per exposure zone is equal to log(2)/log(1.01) ≈ 70. The number two comes from the definition of an exposure zone (half or double the light intensity) and 1.01 is the 1% JND difference coming from the Weber-Fechner law. Again, this number of levels assumes complete adaptation to a relatively narrow range of luminances around the evaluated neighboring levels.
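The arithmetic in this paragraph can be checked with a few lines of Python. This is a minimal sketch; the function names are made up for illustration, and the 300:1 contrast ratio and the 1% Weber fraction are the values quoted in the text.

```python
import math

def exposure_zones(contrast_ratio):
    """Number of f-stops (doublings of luminance) spanned by a contrast ratio."""
    return math.log2(contrast_ratio)

def levels_per_zone(total_levels, contrast_ratio):
    """Average number of code values per zone if they were spread evenly."""
    return total_levels / exposure_zones(contrast_ratio)

def jnd_steps_per_zone(weber_fraction=0.01):
    """Number of just noticeable steps that fit into one doubling of luminance."""
    return math.log(2) / math.log(1 + weber_fraction)

print(round(exposure_zones(300), 1))      # zones for a 300:1 display
print(round(levels_per_zone(256, 300)))   # 8-bit levels per zone
print(round(jnd_steps_per_zone()))        # distinguishable steps per zone
```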

Note*: if we send linearly sampled data through a power function and quantize them, we will lose a certain number of levels, depending on the number of bits of the input and the number of bits of the output. For example, if we sent 256 linearly spaced levels (8-bit encoding) through the gamma compression curve of 0.4545 (= 1/2.2), we would lose 72 levels, leaving only 184 to work with. However, if our camera uses 10 or 12 bits to represent the captured linear RAW data, gamma compression with the same power coefficient of 0.4545 would preserve all 256 levels in 8-bit encoding. See Bruce Lindbloom's Level Calculator for details.
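The loss of levels can be explored with a small counting experiment. The sketch below assumes a pure power function and round-to-nearest quantization; both are assumptions of this example, and Lindbloom's calculator may use other conventions, so the counts can differ by a few levels (a pure power law is also very steep right at black).

```python
def distinct_output_levels(input_bits, output_bits=8, gamma=2.2):
    """Count distinct output codes after gamma compression and quantization."""
    in_max = 2 ** input_bits - 1
    out_max = 2 ** output_bits - 1
    encoded = {
        round(out_max * (code / in_max) ** (1.0 / gamma))
        for code in range(in_max + 1)
    }
    return len(encoded)

for bits in (8, 10, 12):
    print(bits, "bit linear input ->", distinct_output_levels(bits), "distinct 8-bit levels")
```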

The idea behind nonlinear quantization is depicted in Figure 17. The x-axis is the normalized digital input, such as a photon count passed through the analog-to-digital converter (ADC). The numbers just above it are the input values for 8-bit encoding (typical image editing range of 0-255). The black line is the linear response of an arbitrary digital device (camera, scanner sensor). Clearly, the output (on the y-axis) is directly proportional to the input. As an example of logarithmic correction, the blue curve in Fig. 17 describes the transformation from the linear (black) to a logarithmic domain. In order to avoid log troubles at 0, transformation (I) was used instead of a direct log. Dc is the digital count input and p is a parameter ∈ (0, ∞).

| y = |

|

The red curve is a "classical" simple gamma-corrected mapping. One can see that the gamma mapping is steeper in the shadows while the highlights have a nearly identical mapping. If we were quantizing in linear steps, we would be "wasting" bits in bright regions (remember, we can't distinguish much of the brightness level difference in the lights anyway) and not have enough in the areas where it matters most, i.e. the darks. Here is why. For the linear scale, pixels above a value of 204 (in 8-bit encoding) would require (1.0-0.8) × 100 = 20% of the available levels (the same as pixels below 50). For the nonlinear scale, the same regions will claim ~10% ((1.0-0.9) × 100) and 50% of the available levels, respectively (orange rectangles). Read the following box and references for more examples and explanations.

i) We will assume a linear grayscale file in 8-bit encoding (a total of 256 levels). Since the output luminance changes linearly with the input luminance, the first exposure zone (128-255) will have 128 brightness levels (256/2), the second zone (64-128) 64, and the last three dark zones (no. 6-8) will have a total of 7 levels (4+2+1).

ii) Now consider a gamma 2.2 encoded 8-bit grayscale image (e.g., JPG). Taking half of the normalized luminance input results in a normalized output of $0.5^{1/2.2} = 0.7298$ at the lower edge of the first exposure zone. This zone would consume (1-0.7298)*256 = 69 brightness levels. The second zone would have $(0.7298 - 0.25^{1/2.2}) \cdot 256 = 50$ levels, the third 37, the fourth 27, followed by 20, 14, 10, and so on. Note that due to the power function applied to the input luminance data, the number of exposure zones is greater than 8. Clearly, gamma encoding reallocates encoding levels from the upper exposure zones into the lower zones to make the distribution of levels much more uniform. This way serious banding at low levels is avoided. See also "Understanding RAW files" at the Luminous Landscape and "Human vision and tonal levels" at Norman Koren's web page.
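The level counts in i) and ii) follow from one short formula. The sketch below uses a hypothetical helper, `zone_levels`, that evaluates the encoded value at each zone boundary (1, 1/2, 1/4, ...) and multiplies the difference by 256, as in the text; gamma = 1 reproduces the linear case.

```python
def zone_levels(gamma, zones=7, total_levels=256):
    """Levels spent on each exposure zone, brightest zone first."""
    def encode(luminance):
        return luminance ** (1.0 / gamma)
    return [
        round((encode(0.5 ** z) - encode(0.5 ** (z + 1))) * total_levels)
        for z in range(zones)
    ]

print("linear   :", zone_levels(1.0))
print("gamma 2.2:", zone_levels(2.2))
```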

Figure 17: Effect of quantization in a capture device

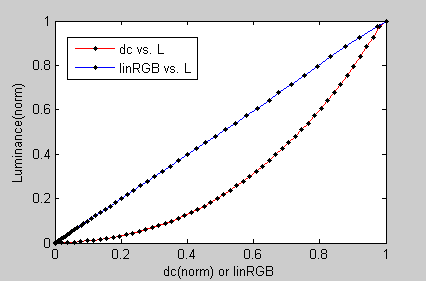

A clear benefit of these transformations is that the number of bits required to eliminate contouring of camera images on a computer display can be reduced from the "safe" 10-12 bits for linear data to 8 bits for logarithmic or gamma-corrected data (see the note above). This has a beneficial impact on signal storage and transmission. Also, such nonlinear ("gamma") correction mathematically transforms the physical light intensity into a perceptually uniform domain. Furthermore, it just happens that the operating principle of CRT displays requires a gamma correction close to 1/γ to maintain perceptually pleasing tone uniformity. So, the first gamma compresses the signal in a capture device, while the second gamma effectively decompresses the corresponding image (Fig. 18), keeping it perceptually similar to the original scene. It is the second gamma that we are mostly concerned with when processing and manipulating digital images.

Figure 18 depicts the typical display gamma relationship between digital RGB values (normalized, on the x-axis) and the luminance output (red curve). Once the input digital counts are linearized, the linear relationship between input and luminance becomes apparent (blue line). In general, linearization can be achieved by any of the mathematical models described in the next section or by canceling out the gamma and 1/gamma curves shown in Figures 17 and 18 (red lines). An example of such cancellation is the so-called overall system gamma (viewing gamma), which can be computed by multiplying the camera gamma by the display gamma (γ_overall = γ_camera × γ_display ≈ 1, e.g. 0.4545 × 2.2 = 1). In reality, this overall gamma is typically in the range of 0.96-1.30. The simple camera gamma is equal to 1/2.2 = 0.4545. The display gamma is the gamma that we are concerned with when setting the calibration target. For Windows systems its value is typically 2.2. It is important to note that gamma only affects the middle tones and has a negligible effect on dark or white areas.
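The cancellation of the two gammas can be illustrated numerically. This is a sketch under the simple power-law assumption used in the text; the function names are made up for the example and real camera and display curves are more complex.

```python
def camera_encode(luminance, camera_gamma=1 / 2.2):
    """Compress normalized scene luminance (0-1) with the camera gamma."""
    return luminance ** camera_gamma

def display_decode(signal, display_gamma=2.2):
    """Expand the normalized signal (0-1) with the display gamma."""
    return signal ** display_gamma

def overall_gamma(camera_gamma, display_gamma):
    """Overall (viewing) gamma as the product of the two exponents."""
    return camera_gamma * display_gamma

mid_gray = 0.18
print(display_decode(camera_encode(mid_gray)))   # back to about 0.18
print(overall_gamma(1 / 2.2, 2.2))               # about 1
print(overall_gamma(1 / 2.2, 2.5))               # slightly above 1
```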

Figure 18: OETF curve and its linearized form

Unfortunately, the term gamma is used rather ambiguously, and it is always necessary to verify how a particular gamma is defined. For display devices, which we are most interested in, the correct term for (or meaning of) gamma is the tone reproduction curve (TRC). It is also referred to here as the optoelectronic transfer function (OETF), although strictly speaking the display direction, from signal to light, is the electro-optical transfer function (EOTF). In general, it describes the relationship between the signal sent to a display (from the video frame buffer) and the radiant output produced by each RGB channel. This relationship is usually nonlinear (as discussed above) and must be modeled or predicted in order to characterize any particular display device. Technically, for LCD displays, this relationship is not a simple function, but rather the result of curve fitting to representative input points that are stored in a profile or calibration look-up table (LUT). This LUT typically involves 16-bit data mapping. Strictly speaking, it follows that there is no "gamma" for LCD displays, as there is no "power function" physics behind the LCD luminance output. While electron guns in CRTs have smooth power-function characteristics along the whole tonal range of each RGB component, the native response of an LCD is closer to a sigmoidal shape (Karim).

However, LCD displays still have a tone reproduction curve! As with CRT monitors, this transfer function describes the relationship between the digital input, given by the RGB values, and the luminance produced by each RGB channel. Since images should look very similar regardless of the display type, LCD manufacturers build correction tables into the display circuitry to account for gamma-corrected images. Hence, LCD displays show a response similar to a CRT gamma function!


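$$
\begin{aligned}
X &= R \cdot X_{r,\max} + G \cdot X_{g,\max} + B \cdot X_{b,\max} \\
Y &= R \cdot Y_{r,\max} + G \cdot Y_{g,\max} + B \cdot Y_{b,\max} \\
Z &= R \cdot Z_{r,\max} + G \cdot Z_{g,\max} + B \cdot Z_{b,\max}
\end{aligned}
\tag{T-1}
$$

$$
\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} =
\begin{bmatrix}
X_{r,\max} & X_{g,\max} & X_{b,\max} \\
Y_{r,\max} & Y_{g,\max} & Y_{b,\max} \\
Z_{r,\max} & Z_{g,\max} & Z_{b,\max}
\end{bmatrix}
\begin{bmatrix} R \\ G \\ B \end{bmatrix}
\tag{T-2}
$$

Linearization: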

During image processing and color space transformations that involve device-independent color spaces, a linear relationship between the image pixel values specified in software and the luminance has to be established. We already know that monitors (CRT, LCD) have a nonlinear response. The luminance can generally be modeled using a power function with an exponent, gamma, as in eq. (II) (simple gamma). During all these operations, luminance and RGB digital counts (values sent to a monitor) have to be normalized to values between 0 and 1. Again, in order to display image information as linear luminance we need to modify the RGB dc domain (i.e., we have to linearize it and thus remove the gamma encoding). As discussed in the previous paragraph, this need comes from imaging systems in which the camera and the display have different transfer functions (which, unless corrected for, would cause problems with tone reproduction).

Simple gamma correction is given by the following equation:

| $R, G, B = dc^{\gamma}$ | (II) |

Other (and more accurate) models include several parameters and nonlinear curve fitting to a power function. Models such as GOG or GOGO are successfully used to characterize CRT monitors. Briefly, the GOG (gain-offset-gamma) model uses the formula:

| $O = (a \cdot I + b)^{\gamma}$ | (III) |

while GOGO model (model recommended for CRT colorimetry) adds additional offset term:

| $O = (a \cdot I + b)^{\gamma} + c$ | (IV) |

Another model used in many RGB color-encoding standards (essentially the GOG) is expressed as:

| $O = \left[\dfrac{I + b}{1 + b}\right]^{\gamma}$ | (V) |

and uses only one constant term for the gain and offset. O refers to linearized R, G, or B values and I is the digital count input normalized to <0-1>. In eqs. (III) and (IV), parameter a is the gain ("contrast" on CRTs), b is the offset ("brightness" on CRTs), c is another offset, and γ is the exponent of the power function. Formally setting the gain to 1/(1+b) and the offset to b/(1+b) in eq. (III), with b now denoting the single constant of eq. (V), gives eq. (V). This particular linearization model is used in the GammaCalc script to fit your experimental data and calculate the corresponding gamma.
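The four models (II) to (V) can be written down directly. The sketch below keeps the symbols a, b, c, and γ from the equations; the function names and the clipping of a negative base to zero in the GOG form are assumptions of this example, not something taken from GammaCalc.

```python
def simple_gamma(dc, gamma):
    """Eq. (II): O = dc^gamma, dc normalized to 0-1."""
    return dc ** gamma

def gog(i, a, b, gamma):
    """Eq. (III): O = (a*I + b)^gamma; a negative base is clipped to 0 (assumption)."""
    base = a * i + b
    return base ** gamma if base > 0 else 0.0

def gogo(i, a, b, gamma, c):
    """Eq. (IV): GOG with an additional additive term c."""
    return gog(i, a, b, gamma) + c

def single_constant(i, b, gamma):
    """Eq. (V): O = ((I + b) / (1 + b))^gamma."""
    return ((i + b) / (1 + b)) ** gamma

# Eq. (V) is eq. (III) with multiplier 1/(1+b) and additive term b/(1+b).
b, g = 0.055, 2.4
for i in (0.0, 0.25, 0.5, 1.0):
    print(i, gog(i, 1 / (1 + b), b / (1 + b), g), single_constant(i, b, g))
```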

To summarize, conversion of R'G'B' image pixel values to the CIEXYZ tri-stimulus values can be achieved via a two stage process.

- Firstly, we need to calculate the relationship between the input image pixel values (dc) and the displayed luminous intensity (Y). This relationship is the transfer function, often simplified to gamma. The transfer functions will usually differ for each channel, so they are best measured independently. A note on the use of tristimulus values (XYZ) for gamma assessment: while the luminance of each channel is described by the corresponding Y-component of the XYZ triplet, the calculated gamma is the same regardless of which tristimulus component is used (provided the component is normalized to the range <0,1>). Thus the red channel gamma can be calculated from normalized dc values and the X-component of XYZ, the green channel would use the Y-component, and the blue channel the Z-component. This approach basically builds one-dimensional look-up tables where luminance is substituted by radiometric scalars (R, G, B) according to: R = LUT(dc_r) (for the red channel).

- The second stage is to convert the displayed red, green, and blue to the CIE tristimulus values. This is most easily performed by using a matrix transform of the following form: $[X\ Y\ Z]^T = M_{3 \times 3} \cdot [R\ G\ B]^T$, where X, Y, Z are the desired CIE tristimulus values, R, G, B are the RGB values obtained from the transfer functions (now linearized), and the 3x3 matrix M contains the measured CIE tristimulus values of the monitor's three channels at maximum output.
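The two stages can be sketched in a few lines. In this example the per-channel transfer function is a simple gamma of 2.2, and the matrix holds the sRGB/D65 primaries as a stand-in for measured values; both are assumptions made for illustration. For a real display, the matrix columns would be the measured XYZ of the red, green, and blue channels at maximum output.

```python
# Assumed stand-in for measured primaries (sRGB, D65); columns are R, G, B.
PRIMARIES_XYZ = [
    [0.4124, 0.3576, 0.1805],   # X of R, G, B at maximum output
    [0.2126, 0.7152, 0.0722],   # Y
    [0.0193, 0.1192, 0.9505],   # Z
]

def linearize(dc8, gamma=2.2):
    """Stage 1: 8-bit digital count -> linear radiometric scalar (0-1)."""
    return (dc8 / 255) ** gamma

def rgb_to_xyz(r8, g8, b8, gammas=(2.2, 2.2, 2.2), matrix=PRIMARIES_XYZ):
    """Stage 2: multiply the linearized RGB vector by the 3x3 primaries matrix."""
    linear = [linearize(v, g) for v, g in zip((r8, g8, b8), gammas)]
    return tuple(sum(row[j] * linear[j] for j in range(3)) for row in matrix)

print(rgb_to_xyz(255, 255, 255))   # white: Y is about 1
print(rgb_to_xyz(128, 128, 128))   # mid gray
```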


Practical Applications:

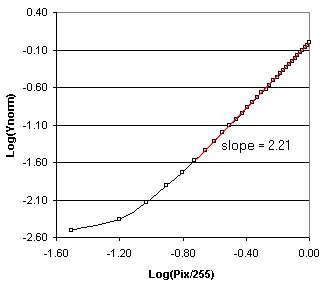

In a typical characterization of displays, a commonly used technique for monitor gamma assessment uses the so-called "log-gamma". This gamma is based on the original gamma definition, i.e., the slope of the "linear part in the tone characteristics obtained by linear regression in the logarithmic domain". Unfortunately, such a gamma cannot be defined unambiguously since the slope depends on which part of the curve is chosen to be linear. Besides that, only the simple gamma model (II) is assumed, that is:

$$Y = dc^{\gamma} + k$$

Transformation into the logarithmic domain leads to:

$$\log(Y) = \gamma \cdot \log(dc) + k_1$$

which is a linear equation with the slope equal to gamma (γ). Strictly, the logarithm turns only a multiplicative constant into the intercept k1; an additive offset k is not removed and shows up as curvature near black. Note that no linearization of R'G'B' values was needed, although the nonlinearity in the dark area is clearly evident (Fig. 19). Overall, this method is still the fastest and a relatively accurate way to assess the monitor gamma.

To measure the grayscale gamma, one usually displays a series of gray patches from RGB=0 to RGB=255 (e.g. in steps of 17) and measures the luminance response as the Y component ($Y_{r,g,b}$) of the tristimulus values (it is the middle value in the XYZ output). Both R'G'B' and Y values are then normalized to the range (0-1) by dividing them by 255 and Ymax, respectively. The logarithm is calculated for both series and plotted as y=log(Y/Ymax) against x=log(R'G'B'/255).

Figure 19: Example of γ calculation

Slope of the linear portion of this plot is taken as the overall system gamma (red line in Figure 19). If you would rather skip the math part, here is the spreadsheet that calculates gamma for you in this simple situation. Alternatively, to have log-gamma calculated for you, upload measured values through the GammaCalc page.
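For readers who prefer code to a spreadsheet, the log-gamma procedure can be sketched as an ordinary least-squares fit in the log-log domain. This is not the GammaCalc implementation; the function name, the `min_dc` parameter (used to select the "linear part"), and the synthetic luminance values are assumptions of this example.

```python
import math

def log_gamma(dc8, luminance, min_dc=1):
    """Slope of log(Y/Ymax) vs. log(dc/255) for patches with dc >= min_dc."""
    y_max = max(luminance)
    points = [
        (math.log10(d / 255), math.log10(y / y_max))
        for d, y in zip(dc8, luminance)
        if d >= min_dc and y > 0          # log is undefined at black
    ]
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    return sxy / sxx

patches = list(range(0, 256, 17))                          # 0, 17, ..., 255
ideal = [120.0 * (d / 255) ** 2.2 for d in patches]        # synthetic, cd/m2
with_black = [0.3 + y for y in ideal]                      # non-zero black level

print(log_gamma(patches, ideal))                   # close to 2.2
print(log_gamma(patches, with_black))              # lower: black offset bends the plot
print(log_gamma(patches, with_black, min_dc=160))  # highlights only
```

Restricting the fit with `min_dc` shows directly how the result depends on which part of the curve is treated as linear.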

Unfortunately, as mentioned above, the OETF characteristics of LCDs are such that a single analytic equation cannot be used to accurately describe their general behavior. Consequently, equations such as eqs. (II-V) may describe the OETF poorly. However, since all computer-controlled systems include a video look-up table, three one-dimensional (1D) look-up tables (one for each channel) can be obtained to define the OETF (see Display Color Management). When the GOG functions are replaced with simple one-dimensional LUTs to characterize the display's electro-optical transfer functions, the characterization performance is excellent.
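A one-dimensional LUT of this kind is just a table of measured points with interpolation in between. The sketch below uses linear interpolation and made-up node values for one channel; real characterization software may use more nodes and smoother interpolation.

```python
from bisect import bisect_right

def lut_lookup(dc, lut_dc, lut_out):
    """Linearly interpolate the measured response at digital count dc."""
    if dc <= lut_dc[0]:
        return lut_out[0]
    if dc >= lut_dc[-1]:
        return lut_out[-1]
    hi = bisect_right(lut_dc, dc)     # first node strictly above dc
    lo = hi - 1
    t = (dc - lut_dc[lo]) / (lut_dc[hi] - lut_dc[lo])
    return lut_out[lo] + t * (lut_out[hi] - lut_out[lo])

# Hypothetical measurements: digital counts and normalized channel output.
nodes_dc = [0, 64, 128, 192, 255]
nodes_out = [0.0, 0.05, 0.22, 0.54, 1.0]

print(lut_lookup(96, nodes_dc, nodes_out))   # halfway between the 64 and 128 nodes
```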


L-star curve:

Some profiling software packages feature several settings for the TRC curve:

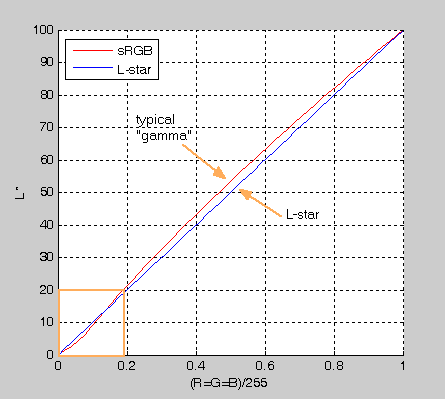

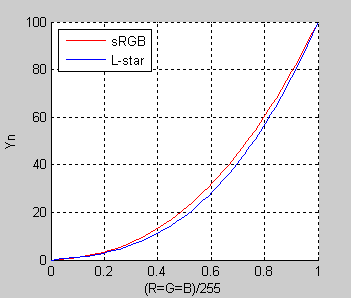

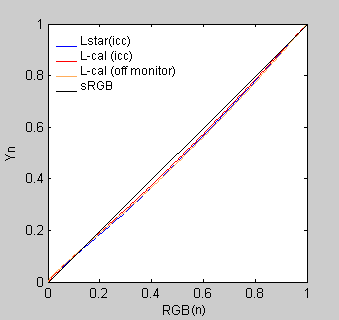

There is not much one can find on the L* curve, and it seems that some vendors use proprietary algorithms for the L-star calibration curve and display icc profiles. To get at least a qualitative picture of what the L-star calibration curve looks like, Figure 20 shows the TRCs for both the sRGB and L-star curves in the form of a Y(normalized) vs. R'G'B'(normalized) plot (in this section denoted as RGB).

Figure 20: sRGB and L-star TRCs (Yn vs. RGBn)

Relative luminance data (Y) were obtained from the icclu utility of Argyll CMS for both the sRGB and L-star icc profiles. This utility uses the corresponding icc/icm profiles to output XYZ tristimulus or L*a*b* values for batches of input RGB device values. ColorThink Pro can also do the job, although the output data is rounded to only two decimal places.

Note: It should be stressed at this point that sRGB and L-star icc profiles are just color space profiles, theoretical constructs that have nothing to do with display profiles created during calibration/profiling process. As such, these color space profiles could be considered as ideal examples to demonstrate the characteristics of display gamma and L-star profiles.

Visual inspection of Fig. 20 suggests that L-star curve brings more contrast (steepness, higher gamma) into the midtones and highlights (RGB 0.5-0.9). We will also see later that value of gamma is not constant and that it varies along the whole tonal range. Curve characteristics in shadows (< 0.25) are not that clear and additional analysis has to be performed. Overall, both TRC curves are quite similar with no distinct features.

An alternative way to arrive at a similar picture is to calculate luminance values (Y) for the L-star curve from the corresponding values of the CIELAB L-channel (0-100). However, it should be mentioned that a typical L-star curve is not identical to the CIELAB L-channel.

Here is the formula for the L* to Y transformation, with Y ∈ <0-100>:

$$Y = 100 \cdot \left(\frac{L^* + 16}{116}\right)^3 \quad \text{for } Y/100 > 0.008856$$

$$Y = 100 \cdot \frac{L^*}{903.3} \quad \text{for } Y/100 \le 0.008856$$

Another characteristic plot is shown in Figure 21. The relationship between calculated CIELAB L*-values and the RGB values for a gray ramp in steps of 5 is plotted as L* vs. normalized input RGB. The same ideal sRGB and L-star icc profiles were used, only this time the icclu utility was configured to output L*a*b* values. As one can see, the L-star response is clearly linear in all brightness ranges (blue line). This means that doubling the value of RGB always changes the value of L by a factor of two, or that stepping the RGB values by e.g. 10 points changes the L values by a constant increment (in this case by 100/255*10 = 3.9). Also, since incremental changes in L are perceptually uniform, changes from dark to bright values in a synthetic grayscale (RGB from 0-255) will be perceived as smooth and uniform.

Figure 21: L-star and ideal sRGB plots

On the other hand, the sRGB curve (or any other typical calibration gamma curve) results in brighter midtones (red curve). Changes of the same 10 RGB points will be perceptually different in shadows, midtones, and highlights. For typical calibration gamma curves (2.2, 1.8, sRGB), the shape of the curve in the shadows (orange rectangle) will vary depending on the monitor black level and on how the calibration algorithm treats the curve in dark areas. Both the linear and typical gamma curves will usually have non-zero luminance at black, while only typical gamma curves may have a higher contrast in that region. A clear distinguishing feature is the convex character of the typical gamma curve in the region from about R'G'B'=80 to R'G'B'=200. Real experimental L-star curves are nearly linear.
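The L* to Y formula and its inverse are easy to put into code. The sketch below also builds an idealized L-star TRC in which L* is exactly proportional to the RGB value; as noted in the text, a typical vendor L-star curve is not identical to the CIELAB L-channel, so `ideal_lstar_trc` is only an illustration.

```python
def lstar_to_y(l_star):
    """CIELAB L* (0-100) -> relative luminance Y (0-100)."""
    if l_star > 8.0:                       # equivalent to Y/100 > 0.008856
        return 100.0 * ((l_star + 16.0) / 116.0) ** 3
    return 100.0 * l_star / 903.3

def y_to_lstar(y):
    """Relative luminance Y (0-100) -> CIELAB L* (0-100)."""
    yn = y / 100.0
    if yn > 0.008856:
        return 116.0 * yn ** (1.0 / 3.0) - 16.0
    return 903.3 * yn

def ideal_lstar_trc(rgb8):
    """Normalized luminance of an idealized L-star curve (L* = 100 * RGB / 255)."""
    return lstar_to_y(100.0 * rgb8 / 255) / 100.0

print(lstar_to_y(50))                          # about 18.4: L* = 50 is roughly 18% luminance
print(ideal_lstar_trc(128))                    # mid-scale luminance of the L-star curve
print(y_to_lstar(100 * ideal_lstar_trc(138))
      - y_to_lstar(100 * ideal_lstar_trc(128)))  # 10 RGB points = 100/255*10 in L*
```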

Before we continue with a more detailed analysis, some assumptions have to be made. As we have discussed earlier, an LCD display has no underlying physics that would make luminance follow a power law dependence on the digital input. However, we also pointed out that manufacturers build corrections into the display circuitry of the LCD panel to approximate the power function dependence. Such corrections may justify use of the gamma concept. Thus, if the power law dependence is adopted, the logarithm of Yn vs. the logarithm of RGBn will be a linear function. Indeed, we often see very good linearity at higher luminance values (~ RGB > 160), as shown earlier in Fig. 19. Unfortunately, for midtones and shadows, this linear relationship (i.e. constant gamma) breaks down. To ensure high accuracy, the examples in this section are based on calibrations of a higher-end Eizo CG19 display using ColorNavigator. The calibration software adjusts only the monitor's 10-bit LUT without adding any corrections to the 8-bit video card LUT.

Let's evaluate four different approaches to characterization of TRC curves.

- The first approach assumes that the power law dependence is obeyed for both curves (gamma-based and L-star) and that gamma can be expressed as the exponent in the general formula ($a \cdot x^{\gamma} + c$) or as the slope in the log-log plot in any part of the RGB brightness scale (R=G=B=0 -> R=G=B=255).

- The second approach to TRC analysis is based on a polynomial or spline fit to the Yn vs. RGBn data points followed by analysis of the fitted function. While this approach seems the most rigorous, it is at the same time the least intuitive and generalizable. We would be looking for parameters that uniquely describe the TRC, such as characteristic points and shapes of the n-th derivative of the fitted function (which is still a polynomial function). When the same polynomial fit (I used a 6th degree polynomial) is done on the log(Yn) vs. log(RGBn) scale, the situation is very close to analyzing any log-log diagram such as the one in Fig. 19. Since the log-log plot should be mostly linear (at least in the highlights), the tangent line to it at any point gives the best linear approximation to our fit function. Hence the first derivative of the fit function gives us the slope of the linear portion of the fitted curve, i.e. the gamma. This is of course a simplification, though good enough to analyze gamma and L-star curves.

- Third method is based on comparison against a constant (reference) initial curve such as the one used in gamma encoded sRGB image. This is a reasonable starting point considering that digital cameras frequently transform raw images into the sRGB (or AdobeRGB) color space. Any display TRC curve would then be canceling the encoding gamma curve to provide the resulting TRC. Assuming LUT gamma = 1, resulting TRC would be very close to the real overall TRC curve (approximately linear).

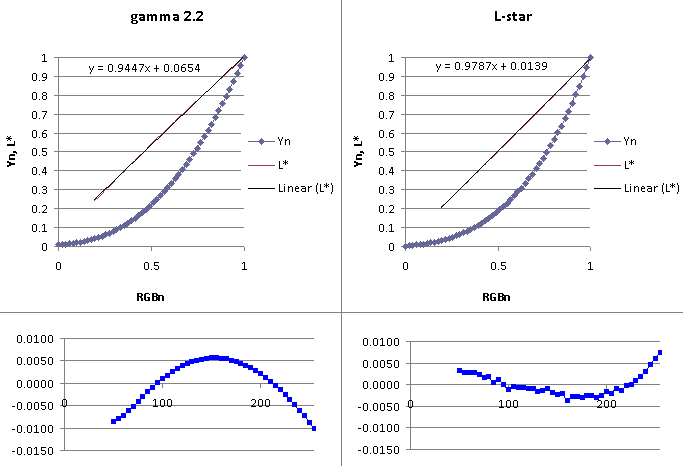

- The last method illustrates a very simple and reliable test for gamma or L-star curves. A plot of Yn vs. RGBn is generated and CIELAB L* lightness values are calculated from the measured Yn. Linear regression is performed on the newly created function L* = f(RGBn). L-star curves are supposed to simulate the response of the CIELAB L*-channel while gamma curves are not. Thus the fit to an L-star curve would ideally be a line with a very small SSE value (sum of squares due to error). On the other hand, gamma curves (after the same Y to L* transformation) should result in a poor linear fit with a higher SSE. Analysis of residuals provides additional information on the goodness of the fit. This approach is qualitatively depicted in Fig. 24.
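The last method in the list above lends itself to a compact sketch: convert Yn to L*, fit a straight line against RGBn, and compare the SSE. The two curves used here are analytic stand-ins (an idealized L-star curve and a pure gamma 2.2 power law), not measured data, and the function names are made up for the example.

```python
def y_to_lstar(yn):
    """Normalized luminance (0-1) -> CIELAB L* (0-100)."""
    if yn > 0.008856:
        return 116.0 * yn ** (1.0 / 3.0) - 16.0
    return 903.3 * yn

def ideal_lstar_trc(x):
    """Idealized L-star curve: L* = 100 * x, converted to normalized luminance."""
    l_star = 100.0 * x
    if l_star > 8.0:
        return ((l_star + 16.0) / 116.0) ** 3
    return l_star / 903.3

def gamma_trc(x, gamma=2.2):
    """Pure power-law curve."""
    return x ** gamma

def lstar_fit_sse(trc, step=5):
    """SSE of a straight-line fit to L* = f(RGBn) for a gray ramp."""
    xs = [d / 255 for d in range(0, 256, step)]
    ls = [y_to_lstar(trc(x)) for x in xs]
    n = len(xs)
    mean_x, mean_l = sum(xs) / n, sum(ls) / n
    slope = (sum((x - mean_x) * (l - mean_l) for x, l in zip(xs, ls))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_l - slope * mean_x
    return sum((l - (slope * x + intercept)) ** 2 for x, l in zip(xs, ls))

print("L-star SSE   :", lstar_fit_sse(ideal_lstar_trc))
print("gamma 2.2 SSE:", lstar_fit_sse(gamma_trc))
```

With measured data, the two analytic curves would simply be replaced by interpolated measurements of the calibrated display.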

Method 1:

Two types of data were analyzed: experimentally obtained data for both the L-star and the gamma 2.2 calibrated monitor (Tables 3, 5) and ideal color space profile data for both types of curves (Tables 2, 4). For both the sRGB and L-star profiles, relative luminance data (Y) were obtained from the icclu utility of Argyll CMS. It is the same data and curves as in Fig. 20. More specifically, a grayscale RGB ramp (in increments of 5) was used as the input and run through the icc profile to get the corresponding XYZ tristimulus data. The Y-component of the tristimulus output and the input RGB data were used in the curve fitting examples. The digital input range (0-255) was divided into smaller subranges as shown in Tables 2 to 5 (column 1). Data points in each subrange were fitted to a general power function and the power coefficient γ was recorded in column 2. The root mean squared error (the square root of the mean square error, or the standard error) is shown in column 3. Linear regression was performed on the log-log scale for the same data points and the slope of the fitted line (γ) is listed in column 4. This corresponds to the so-called log-log gamma. The corresponding RMSE data are shown in column 5. Gamma values calculated in columns 2 and 4 will obviously be different. It should not matter which definition of gamma we choose as long as we stay consistent. Remember, the log-log(γ) is the definition used in display calibration, where linear regression is done in the logarithmic domain.

Table 2: L-star curve, ideal color space profile

| RGB range (8-bit) | γ (fit to a·x^γ + c) | RMSE (power fit) | γ (log-log slope) | RMSE (log-log fit) |
|---|---|---|---|---|
| 0-255 | 2.53 | 3.50E-3 | 2.55 (>210) | 3.13E-4 |
| 200-255 | 2.69 | 3.95E-5 | 2.55 | 3.89E-4 |
| 150-200 | 2.62 | 2.48E-5 | 2.44 | 7.50E-4 |
| 100-150 | 2.49 | 4.67E-5 | 2.27 | 1.80E-3 |
| 50-100 | 2.25 | 8.27E-5 | 1.95 | 6.00E-3 |
| 0-50 | 1.56 | 4.07E-4 | 1.14 | 3.70E-2 |
| 0-25 | 1.00 | 6.39E-5 | 1.00 | 2.40E-3 |

Table 3: L-star curve, calibrated display (measured)

| RGB range (8-bit) | γ (fit to a·x^γ + c) | RMSE (power fit) | γ (log-log slope) | RMSE (log-log fit) |
|---|---|---|---|---|
| 0-255 | 2.56 | 5.69E-3 | 2.63 (>210) | 1.20E-3 |
| 200-255 | 3.05 | 7.39E-4 | 2.60 | 1.61E-3 |
| 150-200 | 2.67 | 4.79E-4 | 2.39 | 1.54E-3 |
| 100-150 | 2.44 | 3.20E-4 | 2.18 | 1.75E-3 |
| 50-100 | 2.15 | 1.79E-4 | 1.82 | 4.76E-3 |
| 0-50 | 1.62 | 3.34E-4 | 0.89 | 6.20E-2 |
| 0-25 | 1.16 | 1.06E-4 | 0.69 | 3.36E-2 |

Table 4: Gamma (sRGB), ideal profile data

| RGB (8-bit) | a·x^γ + c (γ) | RMSE | log-log(γ) | RMSE |
|---|---|---|---|---|
| 0-255 | 2.25 | 1.55E-3 | 2.24 (>160) | 5.94E-4 |
| 200-255 | 2.32 | 1.10E-5 | 2.26 | 1.62E-4 |
| 150-200 | 2.29 | 1.03E-5 | 2.22 | 2.92E-4 |
| 100-150 | 2.25 | 1.80E-5 | 2.15 | 7.70E-4 |
| 50-100 | 2.17 | 2.96E-5 | 2.02 | 3.38E-3 |
| 0-50 | 1.87 | 1.87E-3 | 1.37 | 5.78E-2 |
| 0-25 | 1.07 | 4.80E-4 | 1.14 | 2.86E-2 |

Table 5: Gamma 2.2, measured monitor data

| RGB (8-bit) | a·x^γ + c (γ) | RMSE | log-log(γ) | RMSE |
|---|---|---|---|---|
| 0-255 | 2.26 | 1.53E-3 | 2.21 (>160) | 9.29E-3 |
| 200-255 | 2.32 | 1.36E-5 | 2.23 | 2.41E-4 |
| 150-200 | 2.30 | 7.88E-6 | 2.17 | 3.83E-4 |
| 100-150 | 2.26 | 1.40E-5 | 2.05 | 1.49E-4 |
| 50-100 | 2.17 | 5.12E-5 | 1.76 | 5.72E-3 |
| 0-50 | 1.84 | 1.65E-4 | 0.56 | 6.48E-2 |
| 0-25 | 1.38 | 2.06E-4 | 0.37 | 3.68E-2 |
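The two fitting procedures behind columns 2 and 4 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the fitting software actually used for the tables is not named in the text, so the power function a·x^γ + c is fitted here by a simple scan over γ (with a and c obtained from a linear fit for each trial γ), and the input is the ideal sRGB decoding curve rather than icclu output. The helper names (`linear_fit`, `power_fit`, `loglog_gamma`, `srgb_to_linear`) are hypothetical.

```python
import math


def linear_fit(xs, ys):
    """Least-squares line y = slope*x + intercept; returns (slope, intercept, rmse)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    sq_err = sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys))
    return slope, intercept, math.sqrt(sq_err / n)


def power_fit(xs, ys, gammas):
    """Fit y = a*x**gamma + c by scanning gamma; a and c come from a linear fit."""
    best = None
    for g in gammas:
        a, c, rmse = linear_fit([x ** g for x in xs], ys)
        if best is None or rmse < best[3]:
            best = (g, a, c, rmse)
    return best  # (gamma, a, c, rmse)


def loglog_gamma(xs, ys):
    """Slope of log10(y) vs. log10(x); points with a zero value are skipped."""
    pairs = [(math.log10(x), math.log10(y)) for x, y in zip(xs, ys) if x > 0 and y > 0]
    slope, _, rmse = linear_fit([p[0] for p in pairs], [p[1] for p in pairs])
    return slope, rmse


def srgb_to_linear(v):
    """Ideal sRGB decoding for a normalized input v in [0, 1] (assumed test curve)."""
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


# Gray ramp in increments of 5, restricted to the 200-255 subrange.
codes = list(range(200, 256, 5))
rgbn = [code / 255 for code in codes]
yn = [srgb_to_linear(v) for v in rgbn]

gamma_grid = [1.0 + 0.01 * i for i in range(251)]  # trial exponents 1.00 .. 3.50
g_power, a, c, rmse_power = power_fit(rgbn, yn, gamma_grid)
g_loglog, rmse_loglog = loglog_gamma(rgbn, yn)
print(f"power-function gamma ~ {g_power:.2f}, log-log gamma ~ {g_loglog:.2f}")
```

Changing the bounds of `range(200, 256, 5)` selects another subrange. Note that the log-log RMSE here is computed in the logarithmic domain, so it is not directly comparable with the RMSE of the power-function fit.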

Following are some observations made from Tables 2-5:

- For the L-star curves, trends in γ values are approximately the same for both the power function and the log-log definitions: γ decreases gradually with decreasing RGB input. Values in yellow indicate poor fit to the data. The log-log scale shows a particularly poor linear fit in the shadows.

- L-star curves have a variable γ across the whole RGB input range. The log-log(γ) fit is about 2.5-2.6 when the maximum linear part is considered (RGB > 210). In the RGB range 50-100, the L-star gamma is about 1.8-1.9; in 100-150, about 2.2-2.3; in 150-200, about 2.4; and in 200-255, about 2.6. Closer to the black point, log-log(γ) keeps decreasing and the linear fit becomes very poor.

- For the "classical" gamma-based curves, trends in γ values are very similar for both the power function and the log-log definitions. Log-log(γ) falls off faster for the display curves.

- The log-log(γ) of the gamma curves is about 2.2 when the maximum linear part is considered (RGB > 160). The log-log(γ) stays around 2.0-2.2 from highlights to midtones. Closer to the black point, γ decreases and the log-log linear fit again becomes very poor.

Method 2:

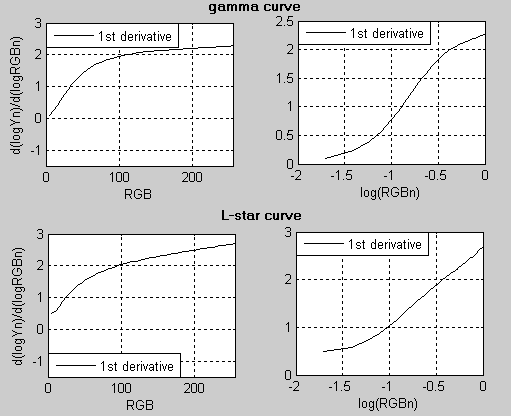

To further assess differences between the two calibration curves, relative luminance values (Y ∈ [0, 100]) were either measured or calculated using the icclu utility of Argyll CMS. A polynomial fit was done on the data (linear and log-log scale), followed by plotting and data analysis. In general, the first derivative of the fitted function (logYn vs. RGB or logYn vs. logRGBn) reflects the type of the TRC curve well. Plots of d(logYn)/d(logRGBn) vs. RGB and d(logYn)/d(logRGBn) vs. logRGBn are shown in Fig. 22. The L-star curve has extensive linear parts in the logRGBn plot and a continuously decreasing "gamma" in the RGB plot. On the other hand, the plot of the "classical" gamma curve has a typical S-shape in the logRGBn plot, while the RGB plot shows a more constant gamma in the highlights. However, as indicated earlier, more data is needed to formulate any general characteristics from these plots. Due to the larger size of the images, the corresponding analysis is available in this document (v. 1.0) (check here for the latest version).

| Figure 22: 1st derivatives of logYn vs. RGB or logRGBn for L-star and gamma TRCs |

|---|

|
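The quantity d(logYn)/d(logRGBn) can be read as a "local gamma". The sketch below estimates it with central finite differences instead of the polynomial fit used above, which is a substitution made here for brevity. It also assumes an ideal L-star TRC in which L* equals 100 times the normalized RGB value, and compares it with a pure power curve of exponent 2.2. The function names are hypothetical.

```python
import math


def lstar_to_y(v):
    """Ideal L-star TRC (assumed: L* = 100*v); returns relative luminance in [0, 1]."""
    lstar = 100.0 * v
    return ((lstar + 16.0) / 116.0) ** 3 if lstar > 8.0 else lstar / 903.3


def local_gamma(rgbn, yn):
    """Central-difference estimate of d(log Yn)/d(log RGBn) at the interior points."""
    lx = [math.log10(x) for x in rgbn]
    ly = [math.log10(y) for y in yn]
    return [(ly[i + 1] - ly[i - 1]) / (lx[i + 1] - lx[i - 1])
            for i in range(1, len(lx) - 1)]


codes = list(range(5, 256, 5))  # skip 0, where the logarithm is undefined
rgbn = [code / 255 for code in codes]
gamma_lstar = local_gamma(rgbn, [lstar_to_y(v) for v in rgbn])
gamma_power = local_gamma(rgbn, [v ** 2.2 for v in rgbn])

# The first and last ramp values have no central difference, hence codes[1:-1].
for code, gl, gp in zip(codes[1:-1], gamma_lstar, gamma_power):
    print(f"RGB {code:3d}: L-star {gl:.2f}, power 2.2 {gp:.2f}")
```

Under these assumptions the pure power curve gives a constant slope, while the ideal L-star slope rises steadily from the shadows to the highlights. Measured data would first need smoothing (for example the polynomial fit described above) before differentiation, because finite differences amplify measurement noise.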

Method 3:

This method provides only a qualitative comparison between two or more TRC curves. All are subtracted from a reference TRC (in our case the ideal camera sRGB TRC curve). When the display TRC curve is also sRGB, a straight line from 0 to 1 results from the subtraction. This is the case shown in Fig. 23 (black diagonal line). The other three lines are TRCs of the L-star curves relative to the sRGB curve. The blue line shows an L-star curve coming from the L-star ICC profile, the red line is an L-star curve coming from the ICC profile of the calibrated/profiled monitor, and the orange line is the experimentally measured TRC based on the same ICC profile. The measurement was done directly off the screen using a 5-step gray ramp. In general, the L-star curves make midtones slightly darker than the typical gamma-based TRCs. We have already seen the same trend in Fig. 21. For a more detailed inspection of these curves, place the mouse over the image in Fig. 23. On the toggled image, differences from the sRGB TRC are exaggerated four times. As one can see, the major differences from the "classical" gamma curves are in the midtones, specifically in the R'G'B' range of 25-100 (8-bit encoding). It is mostly the curve steepness (contrast) that differentiates the curves from each other.

| Figure 23: L-curves subtracted from camera sRGB γ |

|---|

| (place mouse over the image to toggle) |

|
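One possible reading of this comparison, offered here as an assumption because the text does not spell out the computation, is an end-to-end transfer: a linear scene value is encoded with the camera sRGB curve and then decoded by the display TRC. With an sRGB display the result is the identity (the diagonal line), and with an ideal L-star display (assumed: L* = 100 times the encoded value) the deviation from the diagonal becomes visible. All function names below are hypothetical.

```python
def srgb_encode(y):
    """Ideal sRGB encoding of a linear value y in [0, 1]."""
    return 12.92 * y if y <= 0.0031308 else 1.055 * y ** (1.0 / 2.4) - 0.055


def srgb_decode(v):
    """Ideal sRGB decoding of an encoded value v in [0, 1]."""
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


def lstar_decode(v):
    """Ideal L-star display TRC (assumed: L* = 100*v); returns luminance in [0, 1]."""
    lstar = 100.0 * v
    return ((lstar + 16.0) / 116.0) ** 3 if lstar > 8.0 else lstar / 903.3


scene = [i / 20 for i in range(21)]  # linear scene values 0.00 .. 1.00
for s in scene:
    v = srgb_encode(s)
    on_srgb = srgb_decode(v)    # reproduces s: the diagonal reference line
    on_lstar = lstar_decode(v)  # deviates from the diagonal
    print(f"scene {s:.2f} -> sRGB display {on_srgb:.3f}, "
          f"L-star display {on_lstar:.3f}, difference {on_lstar - s:+.3f}")
```

In this idealized setup a scene value of 0.2 comes out below 0.2 on the L-star display. Multiplying the printed difference by four would mimic the exaggeration used in the toggled image.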

The relationship between measured tristimulus Y values (normalized) and normalized RGB values for a 5-step gray ramp is plotted in Fig. 24 as Yn vs. RGBn. The left panel shows data obtained from a gamma 2.2 calibrated Eizo CG19 monitor, and the right panel shows data obtained from a similar L-star calibration, both using ColorNavigator and the X-Rite DTP-94 colorimeter. In both cases, the Y values were also transformed into L* values of the CIELAB color space.

Here is the formula for the Y to L* transformation, with Y ∈ [0, 100]:

$$L^* = 116\,(Y/100)^{1/3} - 16 \quad \text{for } Y/100 > 0.008856$$

$$L^* = 903.3\,(Y/100) \quad \text{for } Y/100 \le 0.008856$$

The least squares method was used to fit a line to the L* vs. RGBn function. The fitted straight line shown in the right panel (in black) is nearly identical to the original L* vs. RGBn function. On the other hand, the linear regression performed on the "classical" gamma curve (left panel) shows hints of deviation from the straight line. The corresponding sums of squares due to error (SSEs) are 0.0003 (L-star) and 0.0010 (gamma 2.2). Analysis of residuals reveals further details of the fit. While residuals for the L-star curve have a generally monotonic concave character, residuals of the gamma curve have a characteristic convex shape typical for other measured or theoretical gamma curves. Due to the uncertainty of curve behavior in the dark areas, evaluate only the parts of the plot starting from about R'G'B' = 50 (RGBn = 0.2) and up. Here is the Excel worksheet that performs all the calculations.

| Figure 24: Linear fit to L* vs. RGB for gamma and L-star TRCs with plotted residuals |

|---|

|
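The Y to L* conversion and the straight-line fit with its residuals and SSE can be sketched as follows. This is a minimal illustration on synthetic curves (a pure 2.2 power curve and an ideal L-star curve with L* assumed equal to 100 times RGBn), not on the measured monitor data, so the SSE values it prints are not expected to match the ones quoted above. The function names are hypothetical.

```python
def y_to_lstar(y):
    """CIE L* from relative luminance Y in [0, 100]."""
    t = y / 100.0
    return 116.0 * t ** (1.0 / 3.0) - 16.0 if t > 0.008856 else 903.3 * t


def fit_line(xs, ys):
    """Least-squares line; returns (slope, intercept, residuals, sse)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    residuals = [y - (slope * x + intercept) for x, y in zip(xs, ys)]
    return slope, intercept, residuals, sum(r * r for r in residuals)


# Gray ramp in increments of 5, starting at R'G'B' = 50 as suggested in the text.
rgbn = [code / 255 for code in range(50, 256, 5)]

# Synthetic relative luminance (0-100) for the two assumed display curves.
y_gamma = [100.0 * v ** 2.2 for v in rgbn]
y_lstar = [100.0 * ((100.0 * v + 16.0) / 116.0) ** 3 for v in rgbn]

_, _, res_gamma, sse_gamma = fit_line(rgbn, [y_to_lstar(y) for y in y_gamma])
_, _, res_lstar, sse_lstar = fit_line(rgbn, [y_to_lstar(y) for y in y_lstar])
print(f"SSE gamma 2.2: {sse_gamma:.4f}, SSE L-star: {sse_lstar:.2e}")
```

Here the residuals are computed as data minus fit on the 0-100 L* scale. Plotting `res_gamma` and `res_lstar` against `rgbn` gives the kind of residual plot described for Fig. 24.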

Links and References:

General references:

- H.R. Kang, Computational Color Technology, SPIE Press, Bellingham, Washington USA (2006)

- B.A. Wandell, L.D. Silverstein, Digital color reproduction, The Science of Color, pp.281-316 (2003)

- G. Wyszecki, W.S. Stiles, Color Science, Concepts and Methods, Quantitative Data and Formulae (2nd Edition), (1982)

- M. A. Karim, Ed., Electro-Optical Displays. New York: Marcel Dekker, 1992.

- Linkwitz Lab - Digital Process Photo (A/D conversion)

Charles Poynton:

Other Gamma links:

- A comparison of four multimedia RGB spaces - pdf (Danny Pascale)

- Monitor calibration and gamma (Norman Koren)

- Gernot Hoffmann (computer vision section - gamcurve26082001.pdf, gamquest18102001.pdf, measgamma10022004.pdf, optigray06102001.pdf)

- Gamma correction (Wikipedia)

- Calibrating monitor gamma (Tom Niemann)

- Computer Graphics Systems Development (CGSD) on Gamma

- Gamma Correction and Precision Color at libpng.org

- L* gamma

- Links browser Gamma Calibration page

- Monitor Calibration Wizard

- Advanced Gamma Corrector

- Epaperpress.com (monitor calibration, gamma check)

General:

Links ICC:

- Andrew Shepherd's ICC Profile Toolkit - use to change the name of icc profile

- Introduction to digital colour management (collection of links to different topics)

- iccView (Tobias Huneke)

Last update : April 15, 2009